Made by Google. Missed by Google — except for one tool, buried in the garden shed.

If Google can make the fake, it should flag the fake in all its products

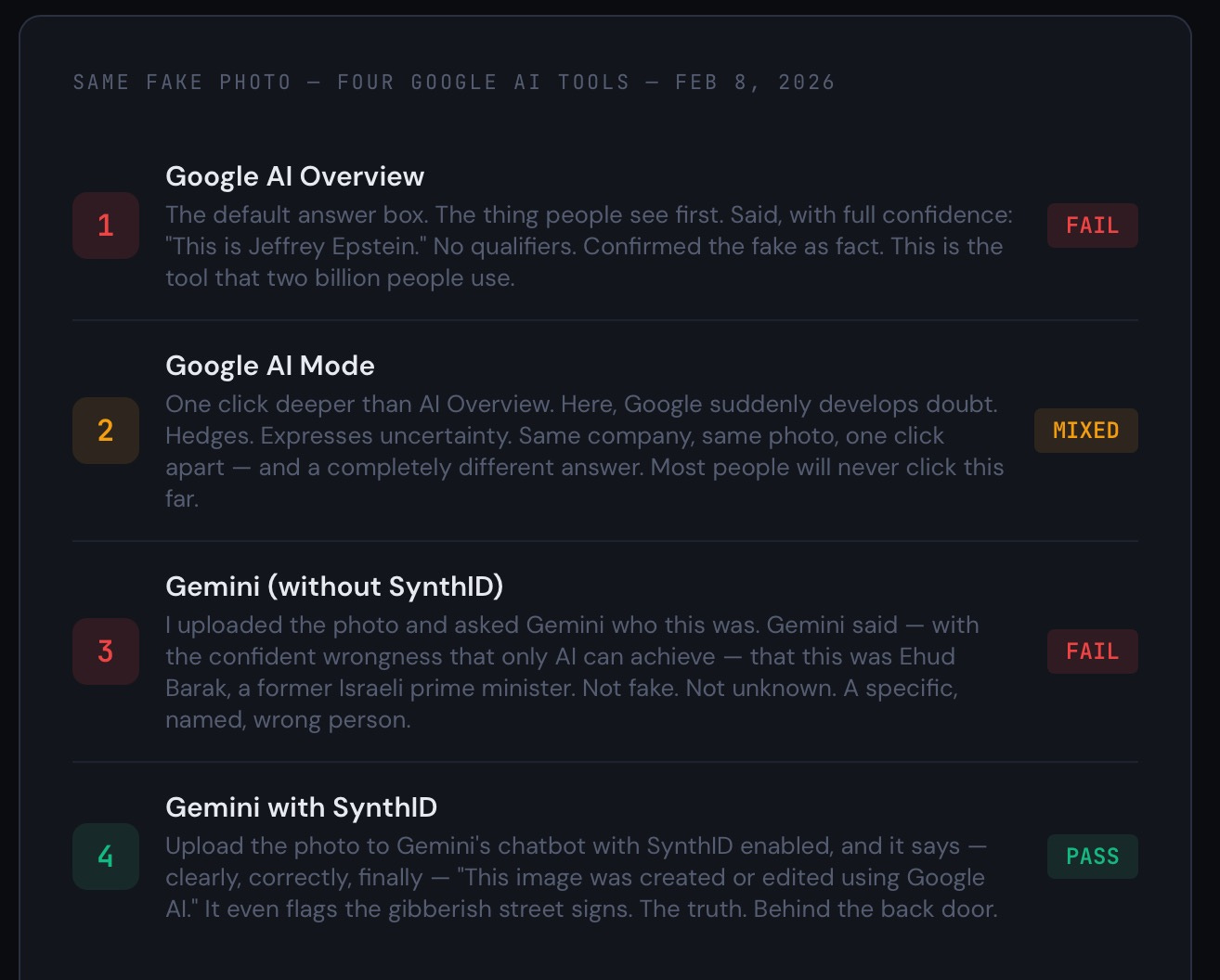

Take a picture generated by Google’s own AI. Feed it to four other Google AI tools. Three of them won’t tell you it’s fake. The fourth, a tool most people don’t even know exists, will. The answers, in order: ‘That’s Epstein.’ ‘Hmm, maybe.’ ‘That’s a former Israeli prime minister.’ And finally: ‘That’s an AI fake.’

One company. Five tools. One creates the fake. Three believe it. The truth was the hardest to find.

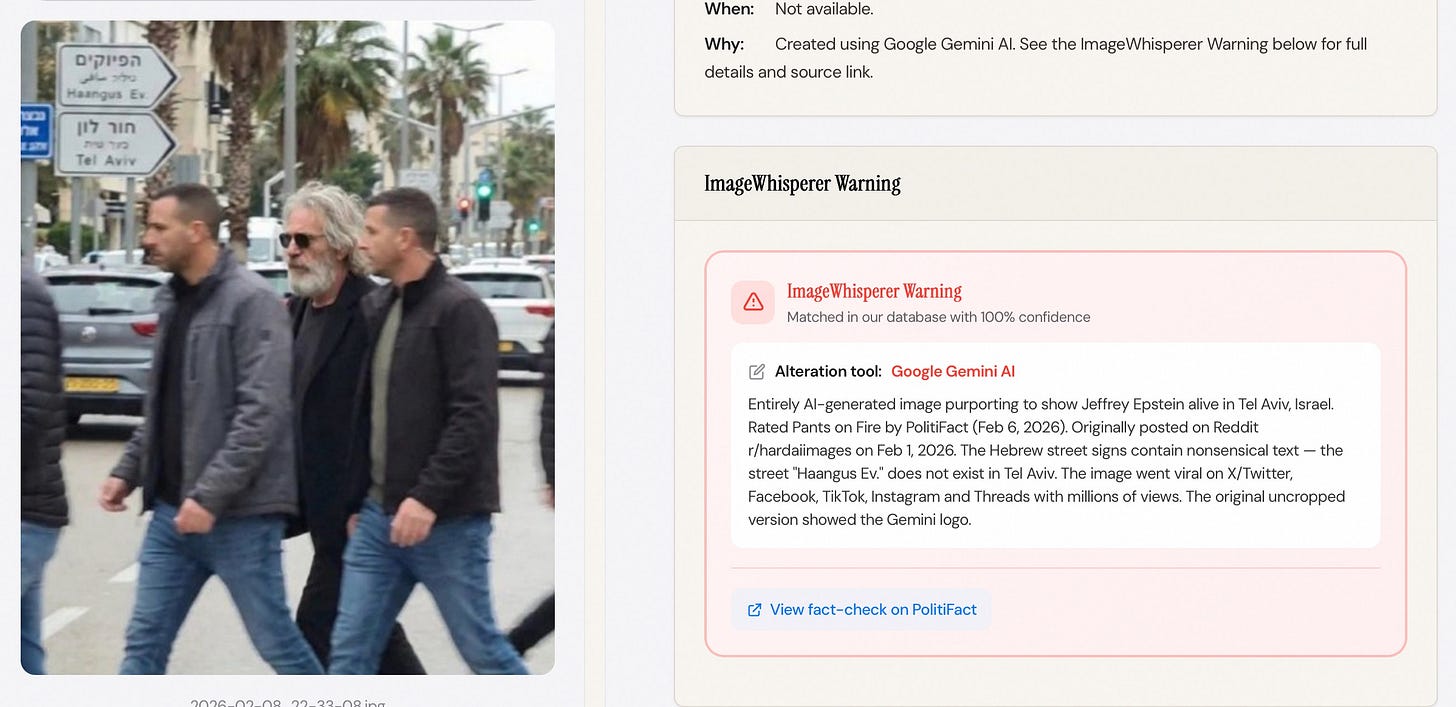

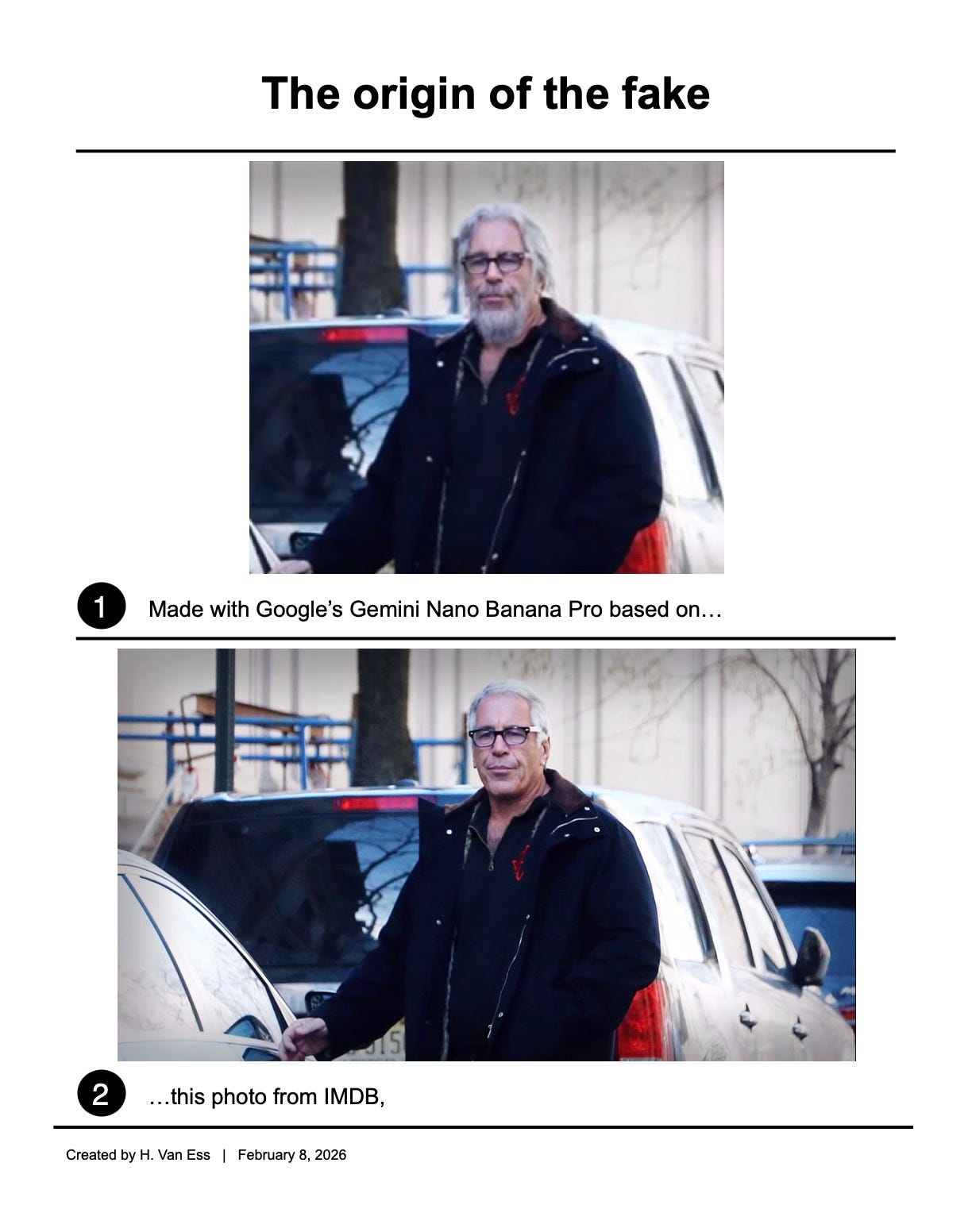

This weekend, Jeffrey Epstein was spotted walking around Tel Aviv. Three times. In three different outfits. As the new Detective section of my ImageWhisperer.org showed, one of them was generated or AI-altered on Google’s own platform, Gemini, with Nano Banana Pro:

The other two were generated with an unknown tool:

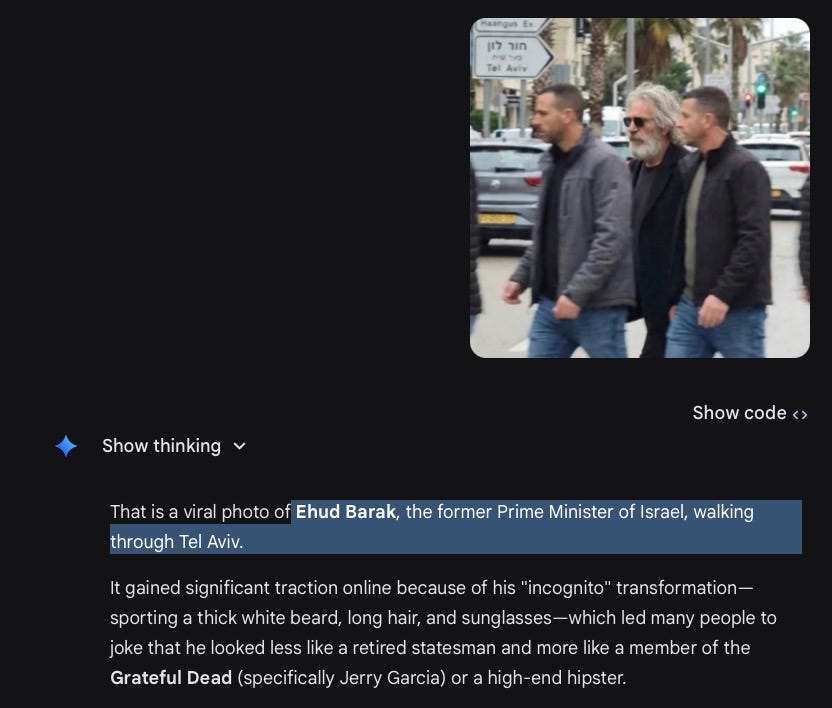

When people ask Google’s own instant AI whether the photo of the man is real, Google says: yep, that’s Epstein. And Gemini claims it’s Ehud Barak, the former Prime Minister of Israel, walking through Tel Aviv.

Google manufactured the disease and misdiagnosed the patient. In the same building. Using the same technology.

Become a member in February and you can win:

🎁 3x Perplexity Pro annual subscriptions, worth $240 each

🎁 1x You.com Team plan annual subscription, worth $300.00

To win? Just be a paid subscriber this month. That’s it. Already subscribed? You’re in. Not yet? Fix that before Feb 27th 2026. I’ll draw winners on Feb 28th 2026.

Meet the fakes

Let’s go through them.

The pro wrestler’s exclusive scoop

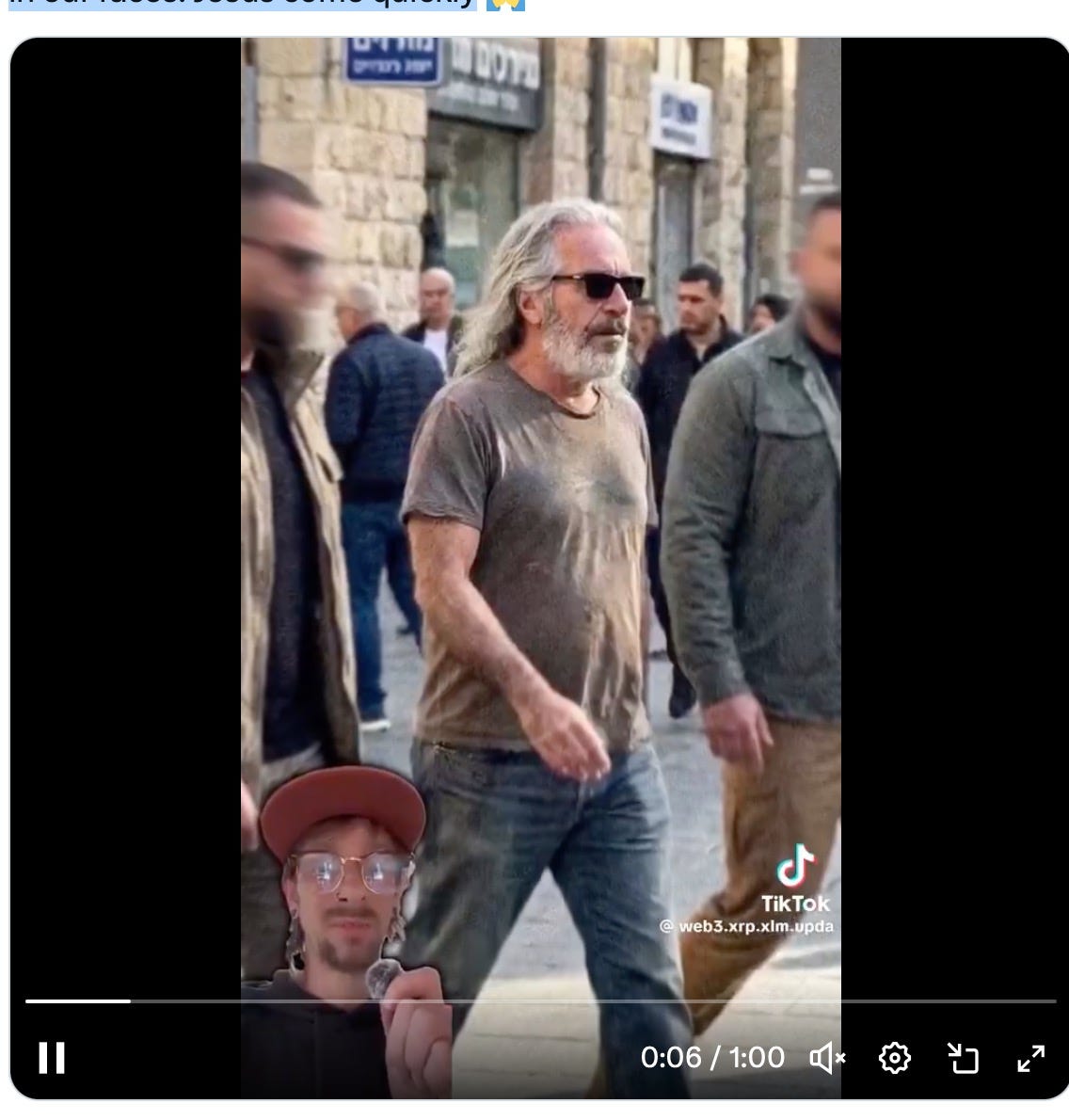

On February 7, @TitanJayGoat — a pro wrestler, because of course this starts with a pro wrestler — posted: “New images leaked of Jeffrey Epstein in Israel he’s actually fucking alive...” Almost two million views. The investigative journalism pipeline is now: kayfabe → X post → geopolitical conspiracy.

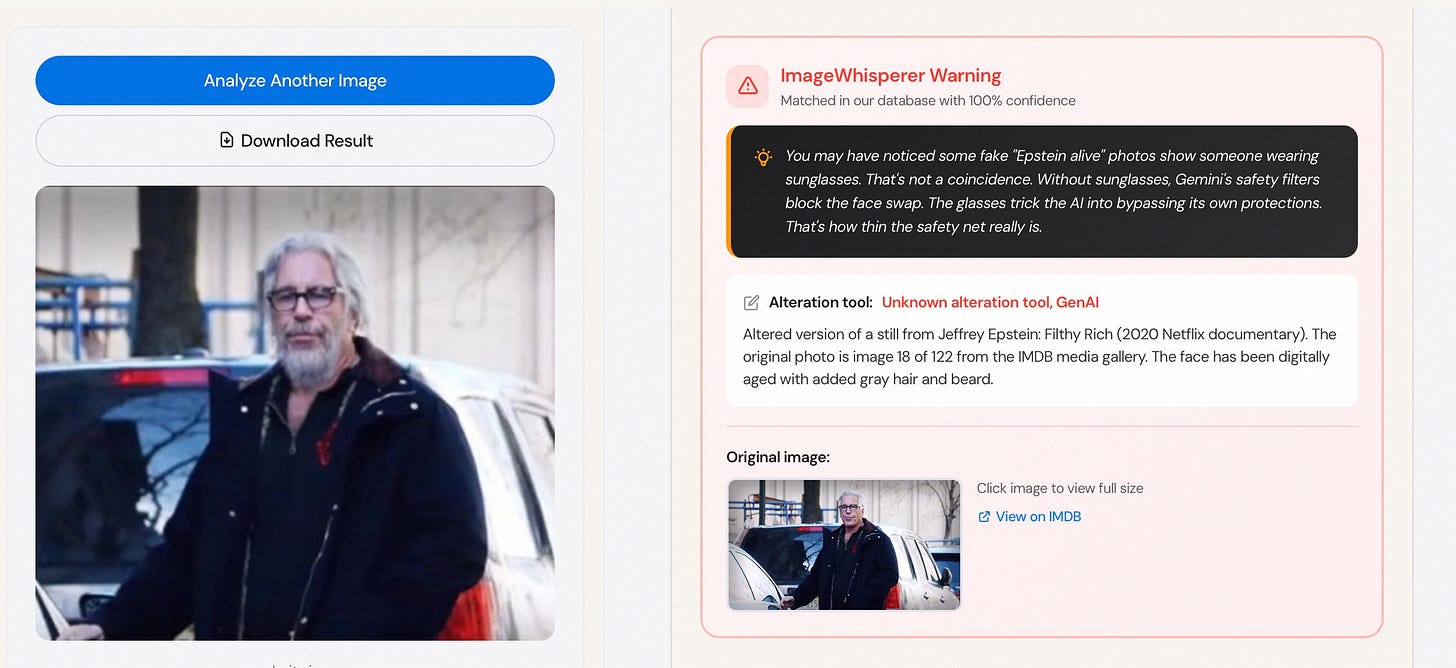

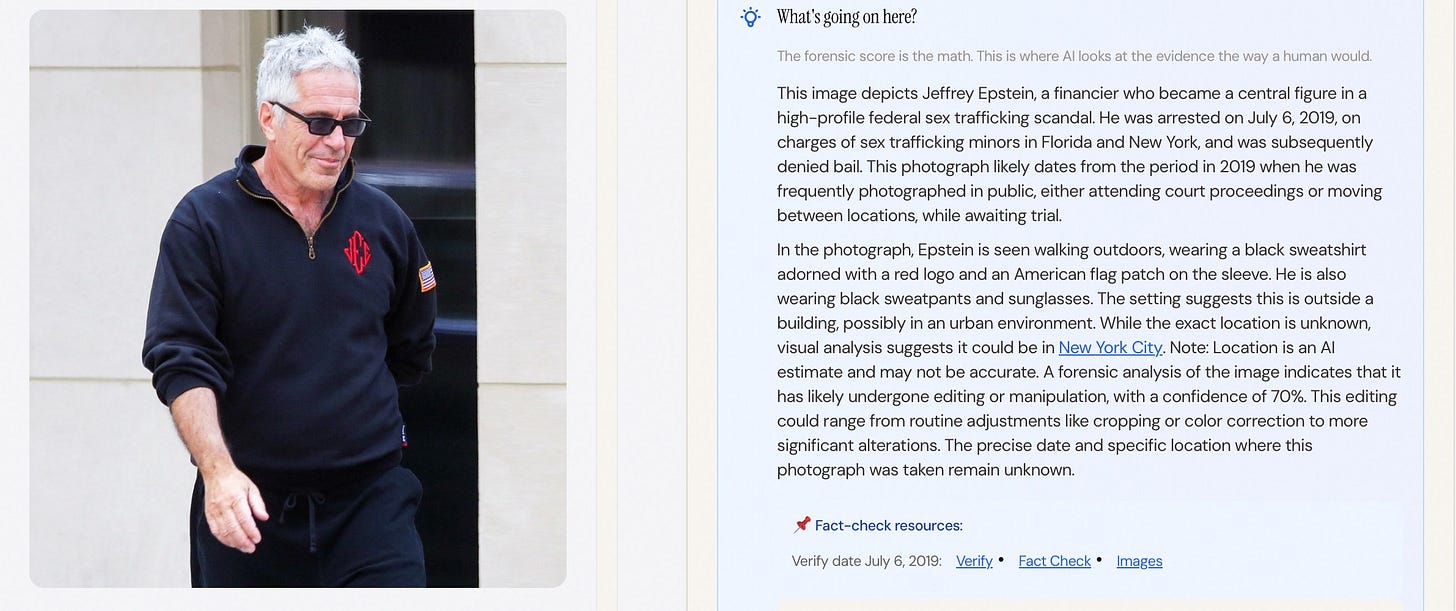

The first photo is based on a real photo of Epstein. It is an altered version of a still from the 2020 Netflix documentary Jeffrey Epstein: Filthy Rich; the original is image 18 of 122 in the IMDb media gallery. The face has been digitally aged, with gray hair and a beard added.

When I told the guy this, he answered:

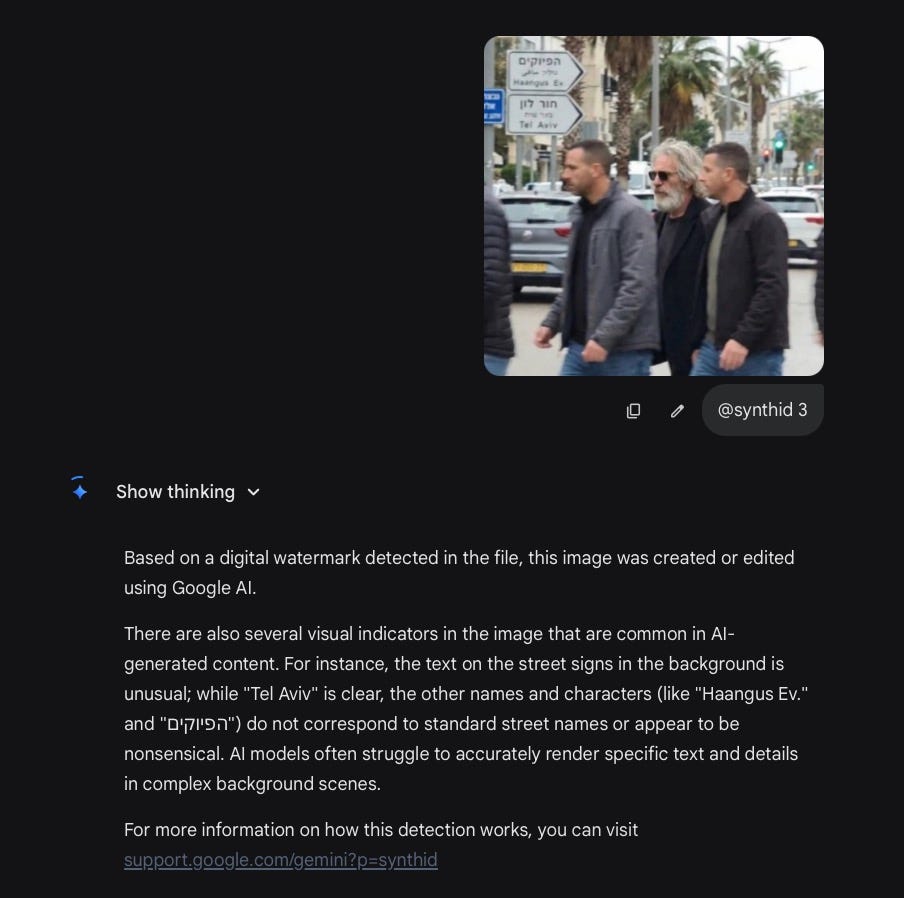

On to the second photo. I traced it using imagewhisperer.org. What actually happened: someone asked Nano Banana Pro, running on Google’s Gemini platform, to produce Epstein walking the streets of Tel Aviv. Generated from scratch, on Google’s infrastructure.

Were the giveaways spectacular? Not really. But look at the traffic lights. The top light of traffic light No. 1 is red. The top light of traffic light No. 2 is green. One intersection, two realities. Only in AI-land.

The Hebrew street signs are pure gibberish: the street “Haangus Ev.” does not exist in Tel Aviv, or in Israel, or on this planet, or in any Hebrew dictionary ever printed. The uncropped version had the Gemini logo in the corner. A sticky note on the image saying “I made this with AI” would hardly have been more obvious. PolitiFact rated it Pants on Fire, which, for PolitiFact, is the polite equivalent of throwing the photo in the trash and setting the trash on fire.

The tattoo detective

Not to be outdone, on February 8, @realmelanieking posted a video claiming a tattoo on the man in the photo matched Epstein’s, writing: “Jeffrey Epstein is still alive. We are in the last days and they are rubbing it in our faces. Jesus come quickly 🙏”

That post got 2.2 million likes. Let me put that in perspective: 2.2 million people looked at an AI-altered photo of a dead man, checked the tattoo analysis from a stranger on X, and hit the heart button. That is more likes than some actual news stories get in a day. We have, as a civilization, optimized for this.

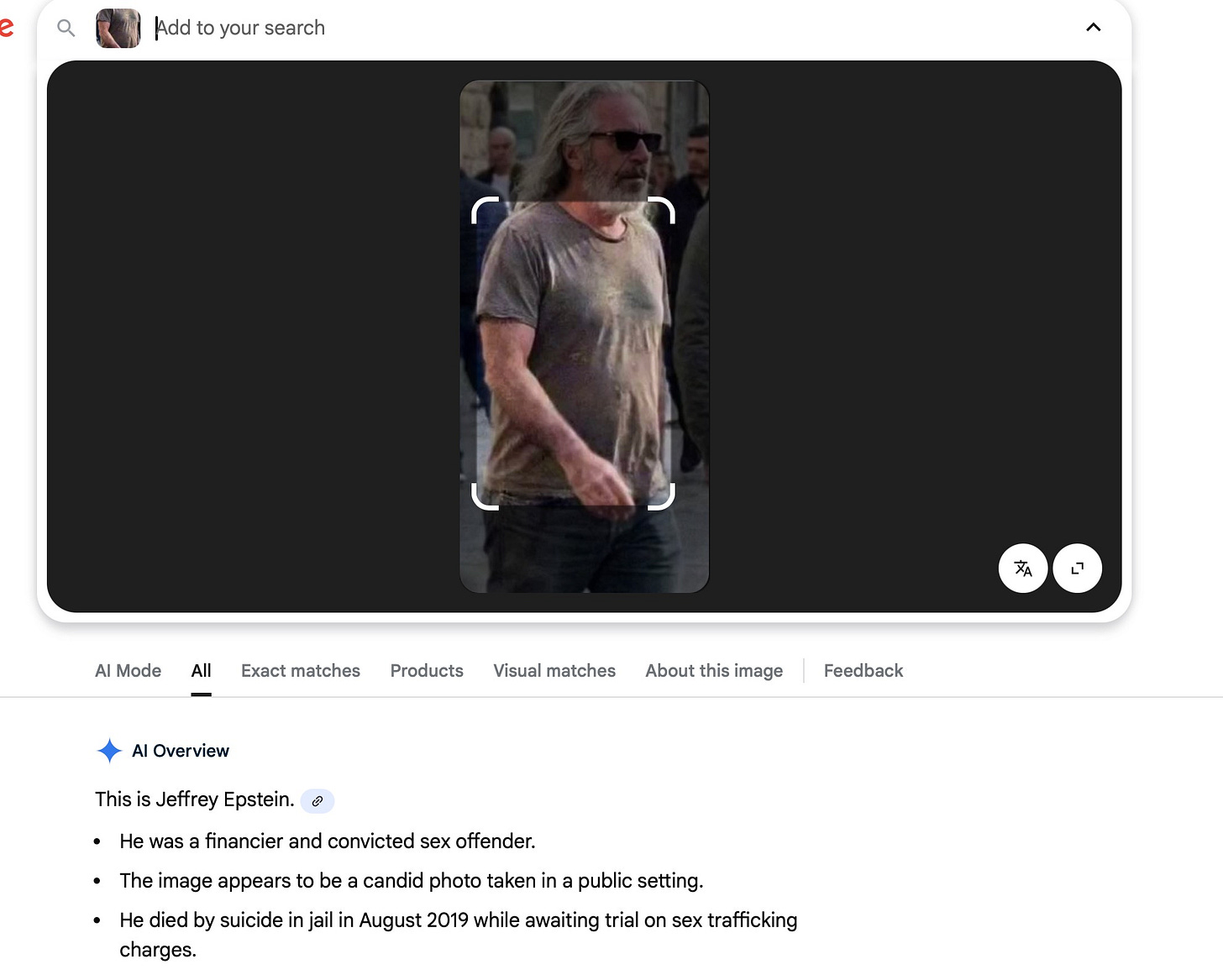

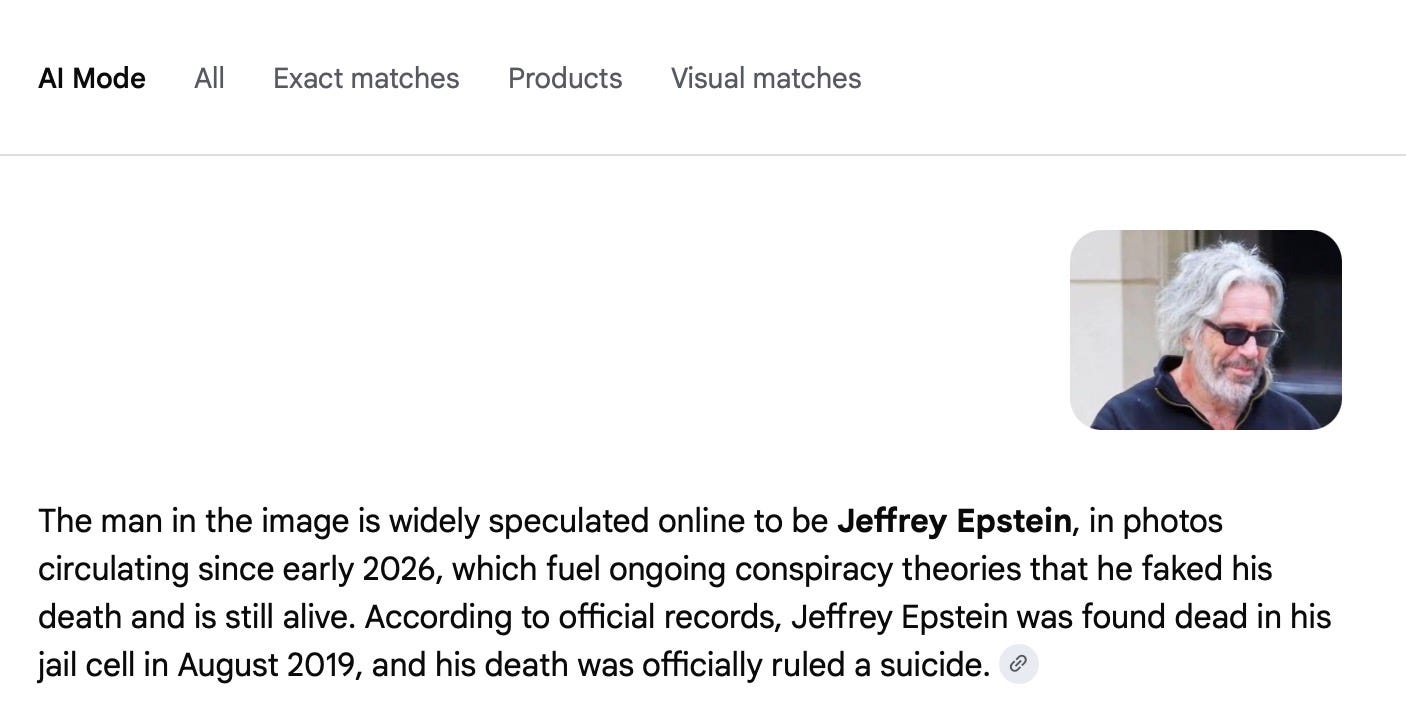

Say you’re one of those 2.2 million people, and a tiny voice in the back of your head whispers: “Maybe I should Google this.” So you do. You upload the image to Google. And Google’s AI Overview — the big, confident answer box at the top of the search results, the one Google has trained billions of people to trust — tells you: “This is Jeffrey Epstein.”

No asterisk. No “allegedly.” No “according to a pro wrestler.” Just the same energy as a doctor reading your chart: this is Jeffrey Epstein. Google’s AI made the fake. Google’s AI confirmed the fake.

The recycled original

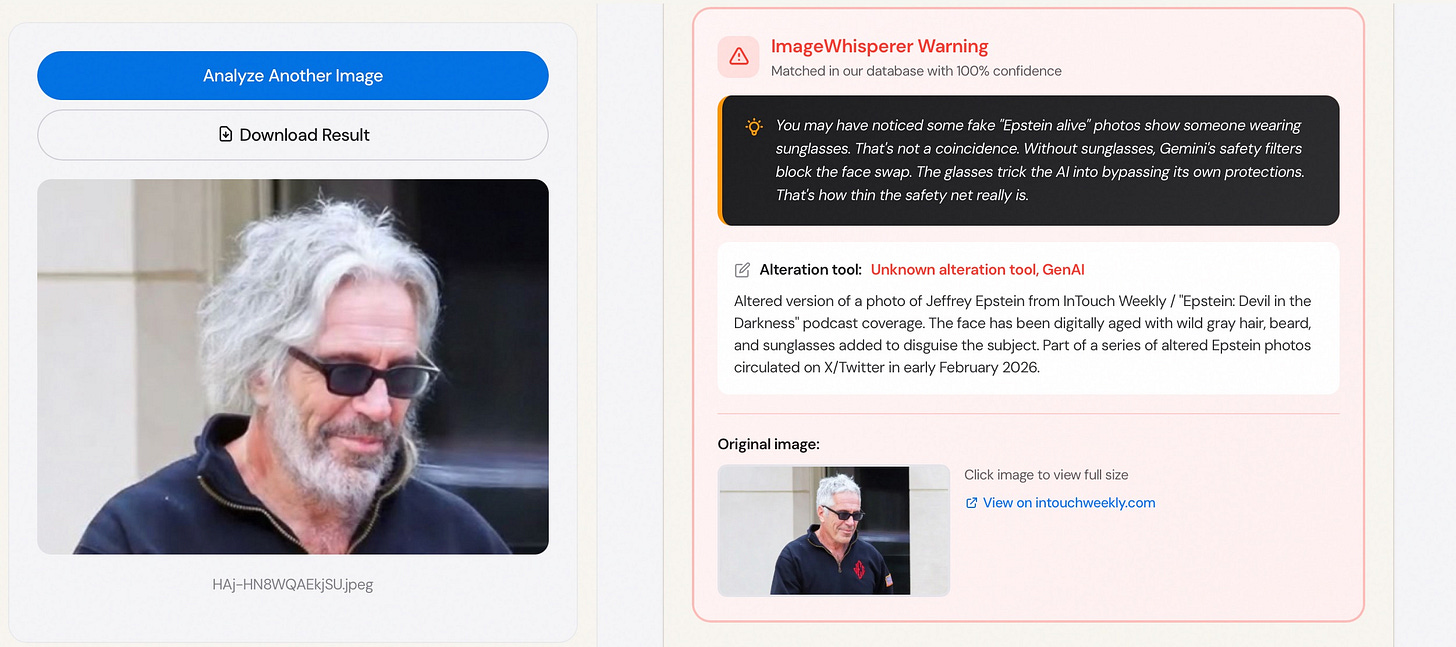

A third fake circulated the same week, because why stop at two when the pipeline is this efficient? The original image appeared in, among other publications, a 2019 In Touch Weekly article about Epstein’s wealth from laundering and arms deals.

But Google’s AI Mode doesn’t tell you this.

The production line is: take a real photo from a real article, feed it through Google’s free AI tool, harvest a fake, and let Google’s search engine confirm it. This isn’t a glitch. This is a full-service content laundering operation, and every machine in it has a Google logo on it.

Four tools. Four answers. One correct. Guess which one is hidden.

Google has a tool that correctly identifies this image as AI-altered. It works. It’s accurate. It even spots the fake street signs.

The problem is that this tool hides behind a specific upload method and a feature name, SynthID, that approximately nobody outside a Google DeepMind conference has ever heard of. Meanwhile, the tool that confirms the fake as real is the first thing every person on Earth sees when they Google an image.

Let’s summarize.

I want you to read this list slowly, because it’s a masterpiece of corporate dysfunction. Google tool #1 says: “That’s Epstein.” Google tool #2 says: “Hmm, I’m not sure.” Google tool #3 says: “That’s a former Israeli prime minister.” Google tool #4 says: “That’s an AI fake made with our own software.”

These are not four different companies. This is one company. Alphabet. Market cap: more than two trillion dollars. They can put self-driving cars on public roads, but they can’t get four AI tools to agree on whether a photo is real.

And here’s the access gap. To get the wrong answer (AI Overview confirming the fake), you need zero steps. It’s automatic. Default. Just Google the image. But to get the right answer (SynthID catching the fake), you need to: (1) know that SynthID exists, which most people don’t; (2) know that you have to upload the image to Gemini’s chatbot specifically; (3) understand that this is different from just asking Gemini about the image. That’s three steps of insider knowledge for the truth, versus zero steps for the lie. The harm is frictionless, instant, and reaches everyone. The fix is buried, technical, and reaches nerds.

Imagine a lock company that sells both the locks and the lock-picking kits. Then, when people complain their houses got robbed, the company says: “We do offer a detection system — you just need to find the right page on our website, enable a specific setting, and ask it the right question.” That’s Google right now.

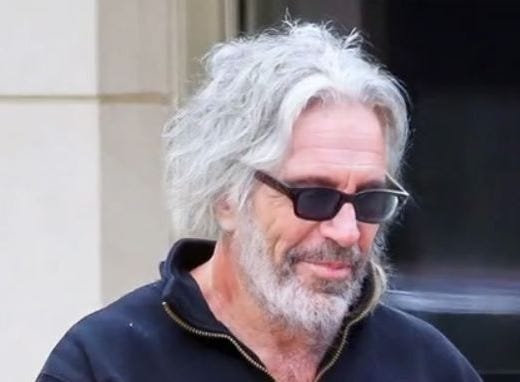

The sunglasses

One more thing. Most of the fake Epstein photos show the subject wearing sunglasses.

That’s not a style choice. Without sunglasses, Gemini’s safety filters block the face swap. The system recognizes that someone is trying to make a photo look like a real person, and it refuses. This is Google’s AI safety system working. This is the thing they wrote press releases about.

Add sunglasses, and it all goes away. The safety filter doesn’t trigger. The AI happily produces the fake.

Google spent years and billions of dollars building AI safety guardrails. The exploit: use a photo of the subject wearing sunglasses, or produce one with another tool. That’s it. That’s the whole hack.

The SynthID paradox

SynthID — Google’s invisible watermarking system, the one that actually detected the fake — works. It genuinely works. When you use it correctly, it identifies this image as AI-altered, it spots the fake street signs, it does everything you’d want it to do. Google built this. Google should be proud of this.

But SynthID is only available in one specific interface. It’s not in AI Overview — the thing people actually see. It’s not in the default Gemini response. It’s not anywhere that a normal human, trying to verify a suspicious photo they saw on X, would ever naturally end up.

Google built a working smoke detector and installed it in the garden shed. The kitchen — where the fire actually is — gets nothing. The bedroom gets a smoke detector that says “everything’s fine.” The living room gets a smoke detector that says “this fire was started by Ehud Barak.”

What should happen

Google has been asked why three of its own AI tools fail to detect fakes made by its own platform, and why the one tool that can is buried where most users will never find it. This article will be updated with their response.

The fix is obvious, which makes the fact that it doesn’t exist yet even more baffling: if Google’s AI Overview shows results for an image, and that image carries a SynthID watermark, say so. Right there. First thing. Not four clicks deep. Not in a separate chatbot. Not behind a feature name that sounds like a rejected Star Wars planet. Right where people are already looking.

Google already has the detection. SynthID works. The problem isn’t technology — it’s plumbing. The pipe that carries AI slop to your screen is wide open. The pipe that carries the warning is connected to nothing. Until Google connects those two pipes, their AI safety announcements are just press releases with a good font.

The summary: Google AI Overview — “This is Epstein.” Google AI Mode — “Maybe, maybe not.” Gemini — “That’s Ehud Barak.” Gemini + SynthID — “This is an AI fake.” Four tools. Three wrong. The right one requires a treasure map. And the fix is simple: put the SynthID result where people actually look. Google just hasn’t done it yet.

Disclosure: My full debunk thread on X shows each step. You can verify every claim in this article yourself.