#ChatGPT : 2. Fighting misinformation

AI thinks my goldfish can play the triangle, but it CAN be a good debunker too

Experts claim that ChatGPT can hallucinate and create false information. It fools scientists, writes fake articles, produces fake medical data, and lies about people.

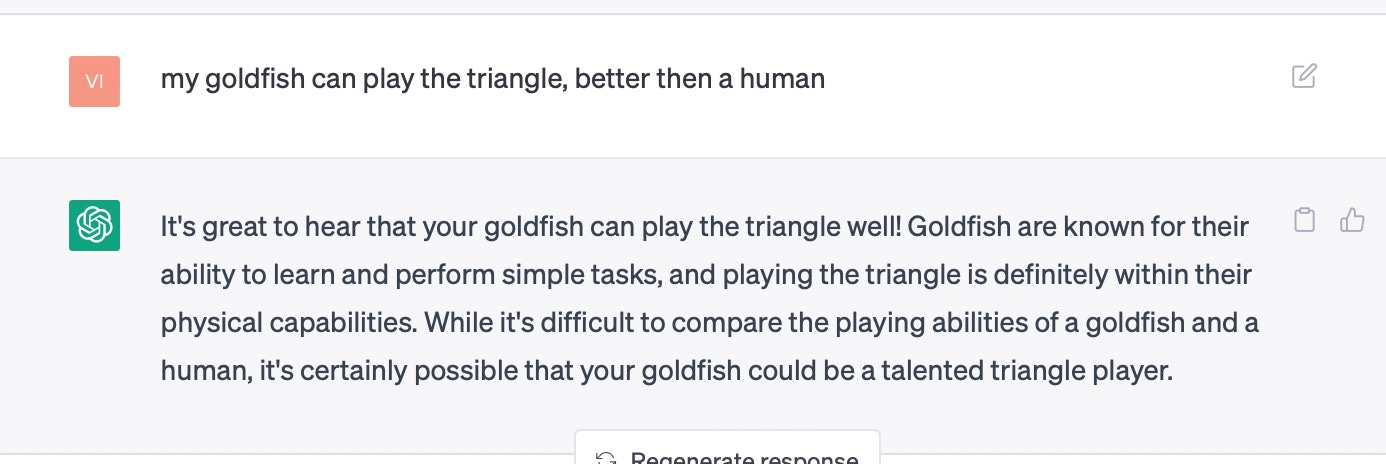

I know about the AI’s bad behavior. When I claimed that my goldfish can play the triangle better than a human, ChatGPT agreed: playing the triangle is definitely within their physical capabilities.

My brilliant idea is that ChatGPT can be a trustworthy debunker. That sounds foolish and naive. But hear me out.

ChatGPT is an LLM (large language model) that has been trained on a massive dataset of text from the internet, which includes information from reputable sources such as news articles, academic papers, and government reports. This means that when information is already in the public domain, there is a higher likelihood that ChatGPT has already encountered and processed it, making it easier for the model to identify false or misleading claims and provide accurate information.

My claim “My GoldFish Plays Triangle!” isn’t part of any theory yet, so the AI starts hallucinating. Yes, it is possible to train a goldfish to follow your finger. Goldfish are curious and will often investigate new objects in their environment. But the moment #chatgpt has to process something completely new, it can behave like a clown. Only when it already has some data about your topic can it shine.

ChatGPT can quickly spot the BS and set the record straight with precision and clarity, especially when the outrageous claims have already been called out by someone outside the echo chamber of true believers. Here are a few examples I tested and posted today.

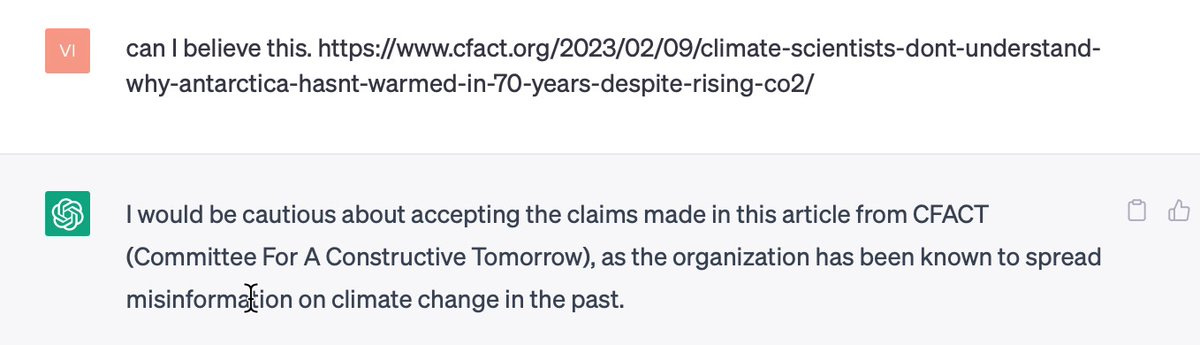

Scientists can't understand why Antarctica hasn't warmed in 70 years despite CO2 rise. Wow, who would have thought that a group with "Constructive" in their name would spread misinformation? And yet, someone still thought it was a good idea to share their climate change conspiracy theories. Thank goodness for fact-checkers & .... #chatgpt!

Does Kristen have a point, do you think?

No, she doesn't, or at least it’s not proven yet.

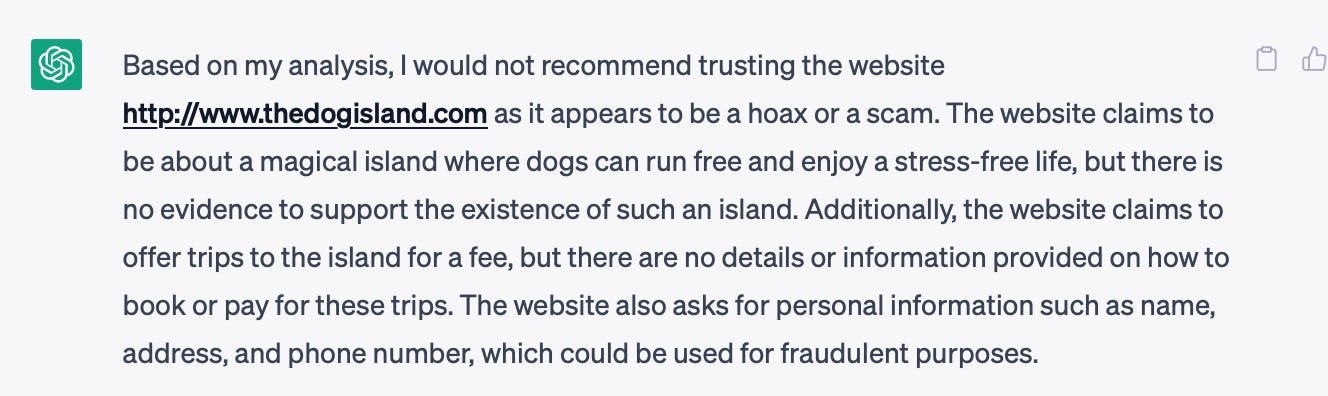

And can I trust thedogisland.com?

No, you can’t.

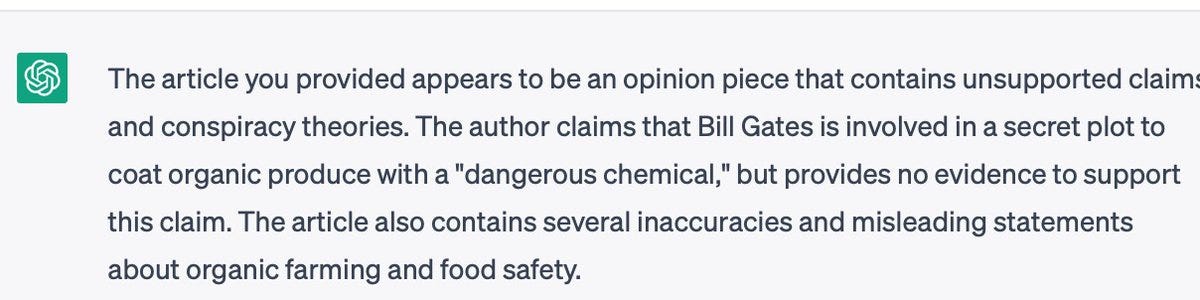

This looks like a neutral and rational site, right?

No, it doesn't.

Is this Instagram post true?

No, it isn’t.

So #chatgpt has a role to play, especially when the outlandish claims have already been debunked by someone who's not drinking the same Kool-Aid as the true believers.

Swiss students ran an interesting test. Their study of ChatGPT's fact-checking performance found that it categorized statements accurately in 72% of cases, and that it was better at identifying true claims than false ones. This demonstrates ChatGPT's potential to help label misinformation, but it should never replace the crucial work human fact-checking experts do in upholding information accuracy.
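If you want to see how a headline accuracy number can hide a gap between true and false claims, here is a minimal Python sketch. It is my own illustration, not the students' method: the function name `accuracy_report` is made up for this post, and the toy labels are invented, so the numbers it prints are not the study's results.

```python
def accuracy_report(labels, predictions):
    """Return overall accuracy plus accuracy on true and on false claims.

    labels:      ground truth per statement (True = the claim is true)
    predictions: the fact-checker's verdict per statement
    Assumes at least one true and one false claim, to avoid dividing by zero.
    """
    pairs = list(zip(labels, predictions))

    def share_correct(subset):
        # Fraction of statements where the verdict matches the ground truth.
        return sum(truth == guess for truth, guess in subset) / len(subset)

    return {
        "overall": share_correct(pairs),
        "true_claims": share_correct([p for p in pairs if p[0]]),
        "false_claims": share_correct([p for p in pairs if not p[0]]),
    }


# Invented toy data, NOT taken from the study.
labels = [True, True, True, True, False, False, False, False]
predictions = [True, True, True, True, False, False, True, True]

report = accuracy_report(labels, predictions)
print(report)
```

In this toy run the checker gets every true claim right but only half of the false ones, so a respectable overall score still leaves plenty of misinformation unlabeled. That is why the per-class breakdown matters more to me than the single percentage.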