Why AI detection fails on the fakes that matter most

Total fakes are easy to spot. Hybrid fakes slip through. How do we fix that?

Last Thursday, AI-generated videos of a snowstorm in Kamchatka, Russia, went viral. My own tool, ImageWhisperer, flagged them. BBC Verify quoted my analysis. Newsrooms on three continents had been fooled. Then people came to test my tool and discovered some serious flaws.

Hybrid fakes were missed entirely. Obvious fakes were scored as “maybe.” Why does this happen? Most AI detectors work like calculators: they output a number. They need to work like detectives and actually look at the evidence.

Here’s what’s broken and how to fix it.

The test that exposed the problem

Kamchatka, Russia. The peninsula had just experienced its worst snowstorm in decades: over two meters of snow, shattering a 146-year-old record. The real event was genuinely remarkable, yet hundreds of AI-generated videos appeared that exaggerated an already impressive reality.

Richard Irvine-Brown of the BBC asked me: “Could you have a look over these two videos, supposedly from Kamchatka, Russia? Obviously, they're AI-generated, but I wondered what you made of them. What would you say are the most obvious indicators?”

There were many signs the videos were fake. A city of 160,000 people doesn’t generate dozens of drone shots and high-end camera angles during a blizzard. The videos showed apartment buildings with 10 or more floors, but Google Maps shows Petropavlovsk-Kamchatsky has hardly any buildings taller than 4 stories. Some videos showed people sledding down massive snowdrifts at impossible speeds, ignoring basic physics: in deep, fresh snow you sink; you don’t glide on top of it. And because few people know what Kamchatka actually looks like, the fakes weren’t flagged quickly.

On top of that, my tool, ImageWhisperer, flagged them as AI-generated. BBC Verify included this in their reporting. I wrote about it on LinkedIn, noting how newsrooms in Panama, Mexico, and Poland had run the fake videos as real footage, some of which the creators had openly tagged as AI-generated on TikTok.

This is like a bank robber wearing a t-shirt that says “I AM ROBBING THIS BANK” and the security guard waving him through because he seemed confident.

Then traffic to ImageWhisperer spiked. People came to test it. And they found problems.

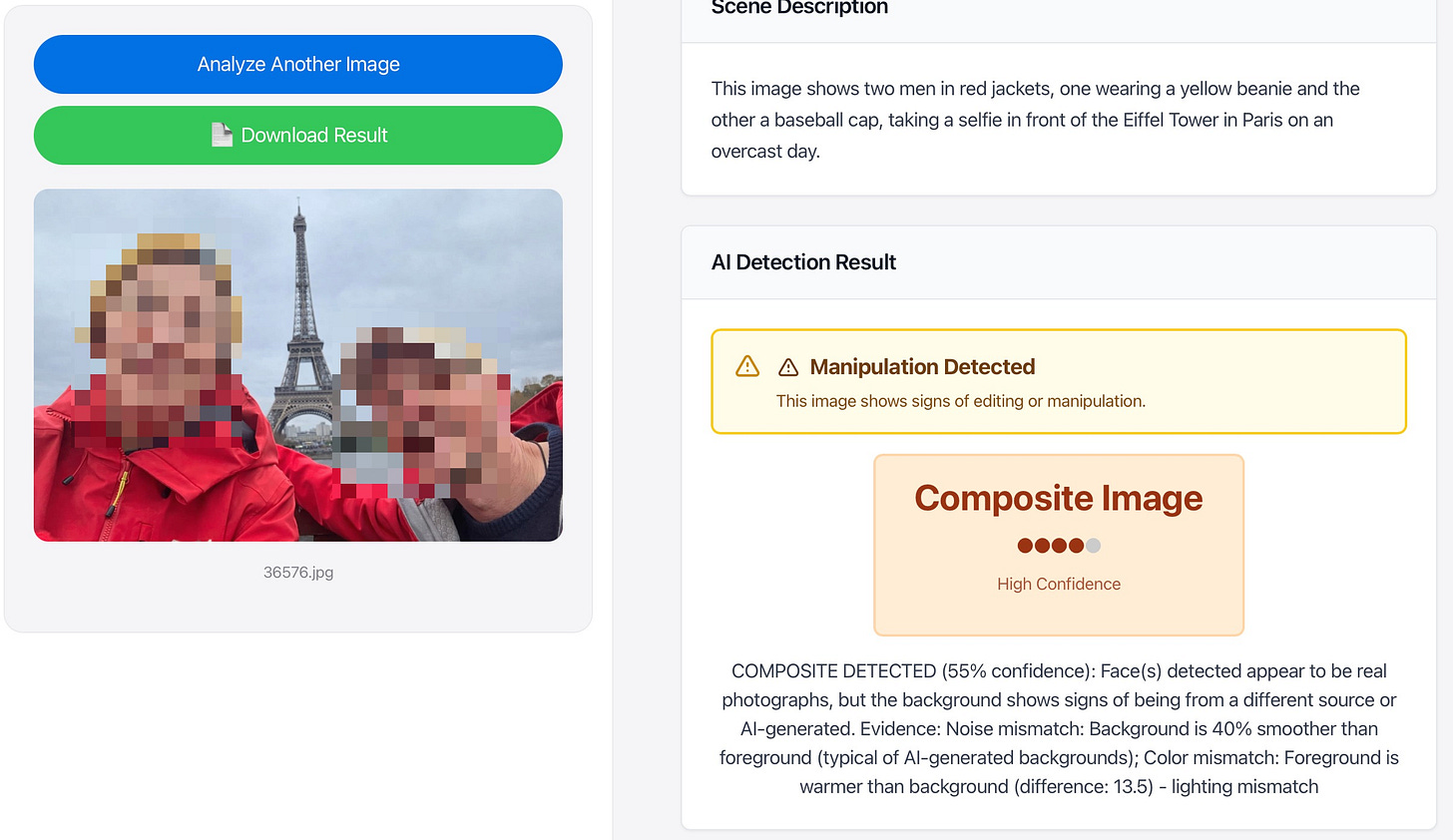

Software engineer Ronan Le Nagard pasted himself and a friend in front of the Eiffel Tower: real people, fake background. My tool said it was authentic.

This is what we in the verification business call “not great.”

Think about why. Le Nagard’s image wasn’t fully fake. He and his friend were real. He just wasn’t actually in Paris. The detector was looking for a 100% synthetic image and found a 100% real person — so it shrugged.

It’s like a counterfeit detector designed to catch bills that are completely fake: wrong paper, wrong ink, wrong everything. But what if someone took a real $20 bill and replaced Andrew Jackson’s face with their own? The paper is real. The ink is mostly real. Only one part is wrong. That’s the kind of fake that often slips through.

And it's getting easier. Google's Nano Banana Pro, ChatGPT's image editor, and Grok on X all let anyone seamlessly edit real photos — swap backgrounds, change faces, alter details — with results that look authentic. The AI handles lighting, edges, and blending automatically. What used to require Photoshop skills now takes a text prompt. The tools that make hybrid fakes are improving faster than the tools that detect them.

When the algorithm misses what eyes can see

That same day, I got an email from Denis Teyssou, an AFP journalist who works on the InVID-WeVerify plugin — one of the most respected verification tools in journalism, with over 155,000 users on the Chrome store. When Denis sends feedback about your detection tool, it’s like getting a note from the head chef saying your soufflé collapsed.

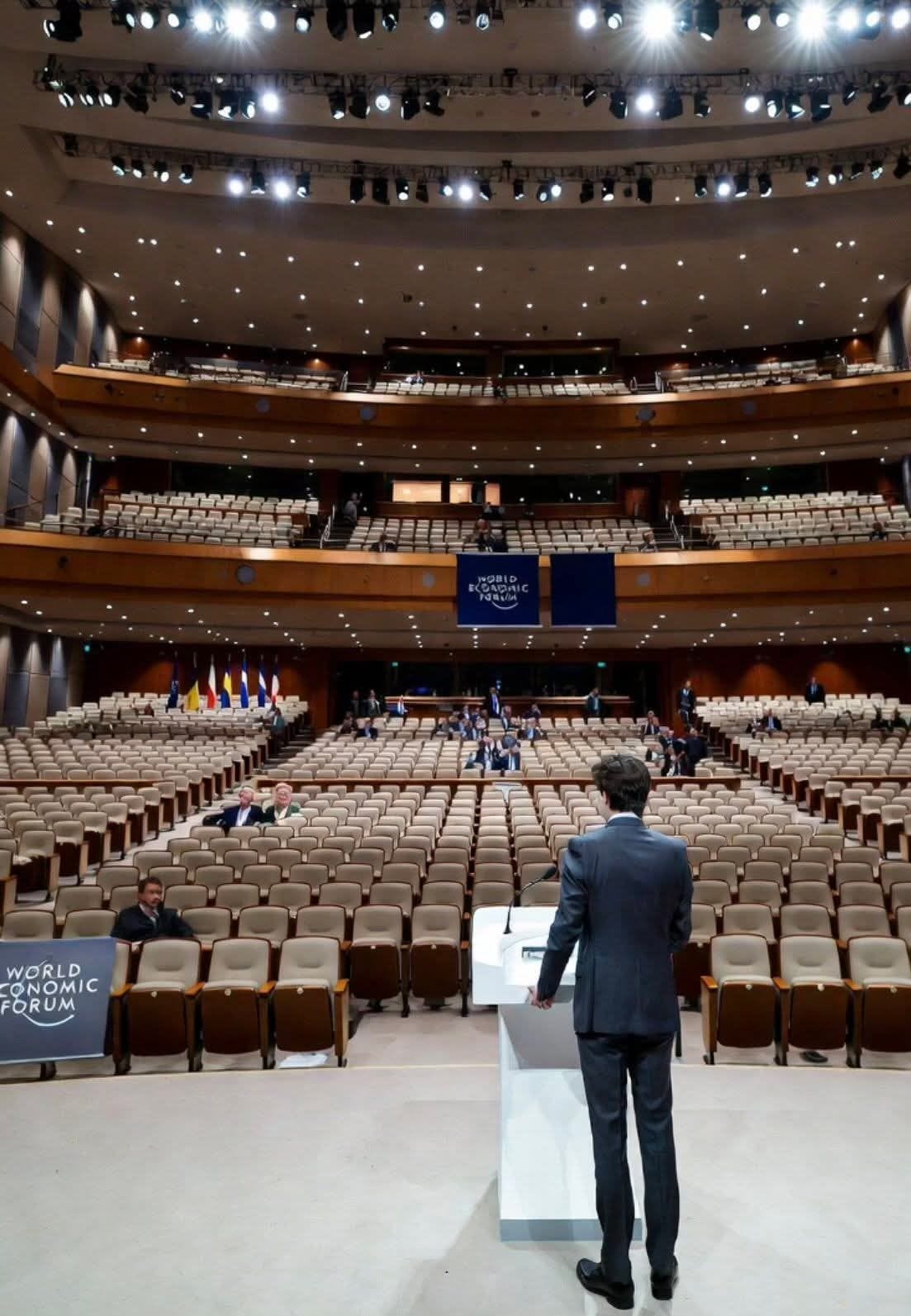

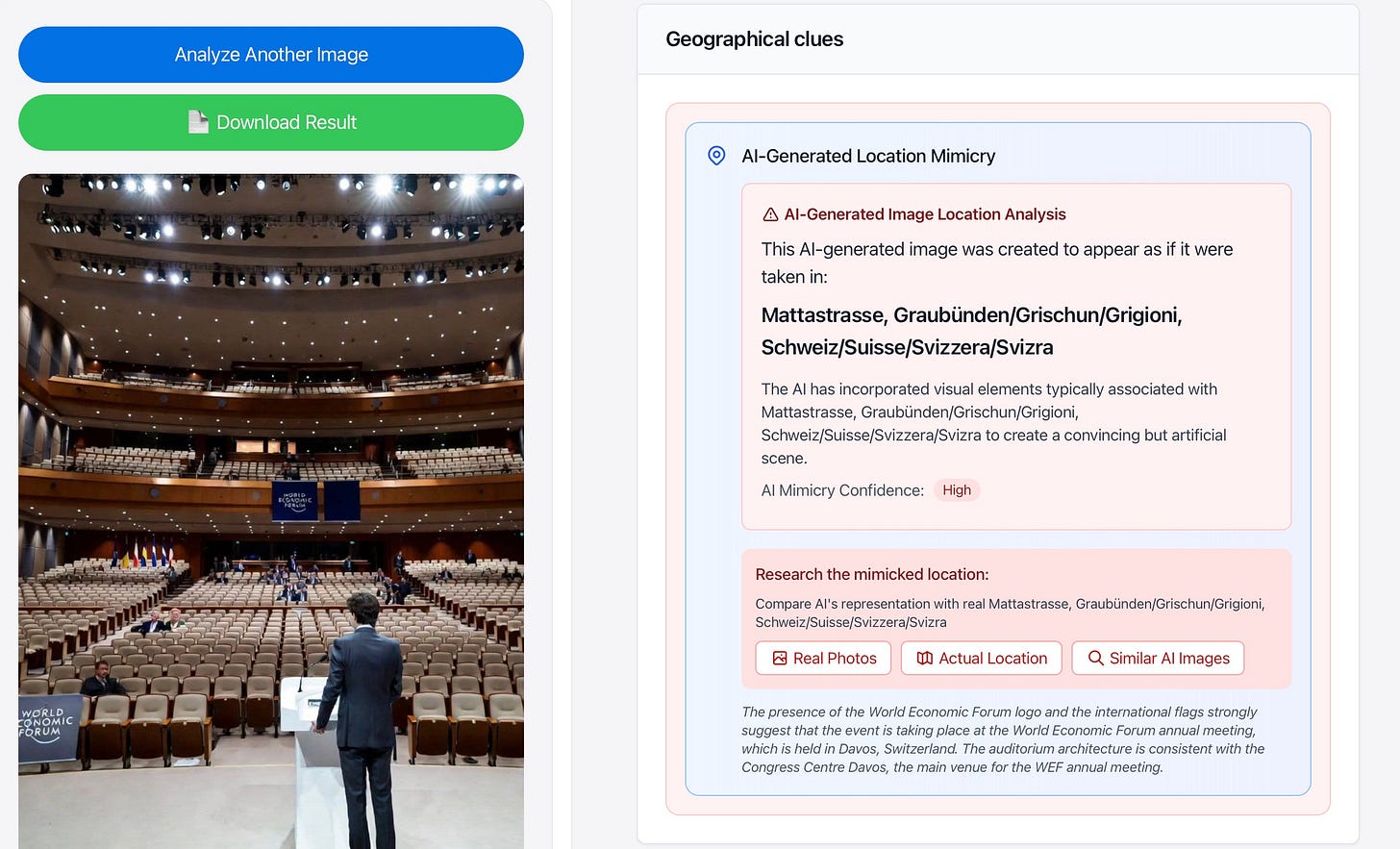

He’d been analyzing a fake image of Canadian Prime Minister Justin Trudeau at the World Economic Forum in Davos. One detection model scored it at 69% — just below the 70% threshold for flagging something as fake. Two other models scored the same image at 94% and 99%.

So: one model shrugged, two models screamed. The system went with the shrug.

But here’s the thing: when Denis simply looked at the image, the faces in the background crowd were obviously distorted. Warped. Melted. Like someone had tried to draw faces from memory after being spun around blindfolded.

In 2024, AI was terrible at faces in crowds. It could do one face pretty well. But ask it to generate a crowd and you could get a horror show of merged eyeballs, impossible jawlines, and ears that look like they’re trying to escape the head they’re attached to. In 2026, the problem is largely gone, though not entirely, as the Trudeau image shows. In videos, crowds still often move in perfect unison, every head turning at the same moment, like a dictator's dream parade.

A human could see this instantly. Most AI detectors can’t. They analyze pixel patterns and statistical signatures and output a probability score, not a judgment. They don't "look" at an image the way you do. They don't notice that a face has three ears or that everyone in the crowd blinks at the same time. And when the math says 69%, they shrug, even when the evidence is staring them in the face.

The rat in the kitchen

Imagine you’re a health inspector with a checklist. A restaurant needs to score above 70% to pass. This restaurant scores 69%. Close enough, you might think.

But then you walk into the kitchen and see a rat wearing a tiny chef’s hat, cooking the soup.

The checklist didn’t have a question about rats in chef’s hats. It’s not one of the criteria. Mathematically, the restaurant only loses a few points for “pest control.” But any human with functioning eyes would say, “I don’t care what the checklist says — that rat is running the kitchen.”

That’s what happened with the Trudeau image. The checklist said 69%. The human eye said “there’s a rat in the kitchen” — faces that look like they melted in the sun.

My tool was thinking like a calculator when it needed to think like a detective. Calculators care about numbers. Detectives care about evidence.

The fixes

Both failures, Ronan Le Nagard’s Eiffel Tower composite and Denis’s Trudeau threshold issue, pointed to the same underlying problem: the tool leaned on a single signal instead of weighing all the evidence.

For hybrid fakes: compare foreground to background

Every camera creates a specific pattern of tiny imperfections in photos — a “fingerprint” scientists call Photo Response Non-Uniformity (PRNU). If a photo is real, the entire image has the same fingerprint. But if someone pastes themselves from Camera A onto a background from Camera B, now there are two different fingerprints in one image. It’s like a crime scene where the DNA on the doorknob doesn’t match the DNA on the murder weapon.
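To make the foreground-versus-background idea concrete, here is a minimal Python sketch. It is a simplified stand-in, not PRNU analysis proper: real PRNU forensics correlates noise against a camera fingerprint estimated from many photos, while this only compares how strong the noise residual is in each region. The foreground mask is assumed to come from some segmentation step, and the 3x3 box-filter denoiser and the 1.5 ratio limit are illustrative choices, not ImageWhisperer's actual code.

```python
import random
import statistics


def noise_residual(img: list[list[float]]) -> list[list[float]]:
    """Pixel minus the mean of its 3x3 neighborhood (interior pixels only).

    A box filter is a crude denoiser; whatever it removes is treated as "noise".
    """
    h, w = len(img), len(img[0])
    res = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            res[y][x] = img[y][x] - sum(window) / 9.0
    return res


def region_noise(res, mask, want: bool) -> float:
    """Standard deviation of the residual over interior pixels where mask == want."""
    h, w = len(res), len(res[0])
    vals = [res[y][x] for y in range(1, h - 1) for x in range(1, w - 1) if mask[y][x] == want]
    return statistics.pstdev(vals)


def noise_mismatch(img, fg_mask, ratio_limit: float = 1.5) -> tuple[float, bool]:
    """Compare noise strength in foreground vs background; flag a large ratio."""
    res = noise_residual(img)
    fg = region_noise(res, fg_mask, True)
    bg = region_noise(res, fg_mask, False)
    ratio = max(fg, bg) / max(min(fg, bg), 1e-9)
    return ratio, ratio > ratio_limit


# Synthetic 40x40 example: the right half (the "pasted person") is much noisier.
rng = random.Random(0)
mask = [[x >= 20 for x in range(40)] for _ in range(40)]
composite = [[128 + rng.gauss(0, 8 if x >= 20 else 2) for x in range(40)] for _ in range(40)]
ratio, suspicious = noise_mismatch(composite, mask)
print(round(ratio, 1), suspicious)
```

A real check would use a proper denoiser and far more pixels, and a mismatch is a reason to look closer, not a verdict on its own.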

I fixed it. ImageWhisperer now separates foreground from background and compares their noise patterns. It also checks edge consistency (pasted elements have unnatural edges), lighting direction (shadows should all point the same way), and compression artifacts.
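The lighting check can be sketched the same way. This hypothetical Python helper assumes the shadow directions have already been measured (by hand or by some upstream detector, not shown) and that one dominant light source, such as the sun, lights the scene. It flags any shadow that disagrees with most of the others by more than an assumed 25-degree tolerance.

```python
def angular_gap(a: float, b: float) -> float:
    """Smallest angle between two directions in degrees, in [0, 180]."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def shadow_outliers(angles_deg: list[float], tolerance_deg: float = 25.0) -> list[int]:
    """Indices of shadows that disagree with most of the other shadows."""
    n = len(angles_deg)
    outliers = []
    for i, a in enumerate(angles_deg):
        agree = sum(
            1 for j, b in enumerate(angles_deg)
            if j != i and angular_gap(a, b) <= tolerance_deg
        )
        if agree < (n - 1) / 2:  # agrees with fewer than half of the others
            outliers.append(i)
    return outliers


# Four background shadows point roughly north (350 to 10 degrees);
# the pasted-in person's shadow points at 120 degrees.
print(shadow_outliers([350, 5, 10, 0, 120]))  # [4]
```

Counting pairwise agreement, rather than comparing each shadow to the average direction, keeps one wildly wrong shadow from dragging the average toward itself and making the honest shadows look suspicious.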

For threshold failures: never trust a single score

The Trudeau image scored 69% on one model but 94% and 99% on two others. The old system went with the first model. The new system counts votes.

Think of it like asking three doctors for a diagnosis. If one doctor says “you’re fine” and two doctors say “you definitely have that thing,” you should probably listen to the two doctors.

Model 1 (LDM): 69% → “Inconclusive”

Model 2 (Bfree): 94% → “AI Detected”

Model 3 (Bfree): 99% → “AI Detected”

Old verdict: “Inconclusive” (trusted Model 1)

New verdict: “Likely AI — 2 of 3 models detect with high confidence”

I added a sort of “detective” to the tool: it now queries four different AIs to check for anything suspicious in the photo. Most of them caught the warped faces, so I've incorporated that into the verdict. I also ask the models to predict where the creator wants us to believe the photo was taken, which helps in understanding the intent behind a fake.
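The vote counting described above can be sketched in a few lines of Python. The 70% threshold comes from the Trudeau case; the 90% cutoff for “high confidence” and the exact verdict wording are assumptions for illustration, not ImageWhisperer's actual rules.

```python
from dataclasses import dataclass


@dataclass
class ModelScore:
    name: str
    score: float  # model's probability that the image is AI-generated, 0.0 to 1.0


def old_verdict(scores: list[ModelScore], threshold: float = 0.70) -> str:
    """Old behavior: trust the first model only."""
    return "AI Detected" if scores[0].score >= threshold else "Inconclusive"


def vote_verdict(scores: list[ModelScore], threshold: float = 0.70, high: float = 0.90) -> str:
    """New behavior: count votes across all models."""
    n = len(scores)
    confident = sum(1 for s in scores if s.score >= high)
    detecting = sum(1 for s in scores if s.score >= threshold)
    if confident * 2 > n:  # a strict majority detects with high confidence
        return f"Likely AI: {confident} of {n} models detect with high confidence"
    if detecting * 2 > n:  # a majority is above threshold, but not confidently
        return f"Possibly AI: {detecting} of {n} models detect"
    return "Inconclusive"


trudeau = [ModelScore("LDM", 0.69), ModelScore("Bfree", 0.94), ModelScore("Bfree", 0.99)]
print(old_verdict(trudeau))   # Inconclusive
print(vote_verdict(trudeau))  # Likely AI: 2 of 3 models detect with high confidence
```

The point of the design is that no single model, however borderline its score, can veto a confident majority.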

The uncomfortable irony

I spent Thursday morning writing about newsrooms that failed to verify AI content before publishing it. I spent Thursday afternoon fixing my own verification tool because experts found it lacking.

The difference is what you do when someone points out the problem.

Denis could have just rolled his eyes. Ronan could have tweeted “lol this tool doesn’t work” and gotten some likes. Instead, they told me what was broken. That feedback made the tool better for every journalist who uses it next.

That’s the value of building verification tools in public. Every bug report closes a gap that misinformation could slip through.

What you can do

The tools will keep improving. But the core problem won’t disappear: AI detectors are built to catch fully fake images, and the fakes that fool people are rarely fully fake.

The best defense seems to be the oldest one — look at the image yourself, and ask if what you’re seeing makes sense.

But here’s what I’ve learned from building this cat-and-mouse tool: it helps when AI fights AI.

Traditional detectors analyze pixels and output a number. They don’t “see” melted faces or impossible architecture. But multimodal large language models do. Unlike classic vision APIs such as Google Vision or Amazon Rekognition, which return labels and bounding boxes, an LLM can look at an image and say: “The faces in the background are distorted. The lighting doesn’t match. This building has 10 floors, but the town only has 4-story buildings.”

They notice what the math misses.

So my new approach isn’t just “run it through a detector.” It’s: let the detector do the math, then let an LLM look at the evidence. Calculator plus detective. That combination catches what neither could catch alone.

It’s not perfect. The fakers will adapt. But right now, the best weapon against AI-generated fakes might just be other AI — trained not to calculate, but to observe.

That’s exactly why I wrote this.

The shift in my thinking is to combine forensic analysis with LLM intelligence. The detector says 69%. The LLM says “those faces are melted.”

When you combine both, the threshold problem shrinks. A borderline score from the forensic detector gets overruled by visual evidence from the LLM. You’re not just doing better math — you’re adding judgment.
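Here is one way that overrule rule could look, again as a hypothetical Python sketch. It assumes the LLM's observations arrive as a list of short text findings (the model call itself is left out, since the article doesn't specify an API), and the 15-point borderline window below the 70% threshold is an illustrative assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    detector_score: float  # forensic score, 0.0 to 1.0
    visual_findings: list[str] = field(default_factory=list)  # anomalies an LLM reported


def combined_verdict(ev: Evidence, threshold: float = 0.70, borderline: float = 0.15) -> str:
    """Let concrete visual evidence overrule a borderline forensic score."""
    if ev.detector_score >= threshold:
        return "Likely AI (forensic score)"
    near_miss = ev.detector_score >= threshold - borderline
    if near_miss and ev.visual_findings:
        return "Likely AI (visual evidence: " + "; ".join(ev.visual_findings) + ")"
    if ev.visual_findings:
        # Far below the threshold: don't auto-flag, ask a human to look.
        return "Needs human review"
    return "Inconclusive"


print(combined_verdict(Evidence(0.69, ["background faces look melted"])))
```

In this sketch, visual evidence can only push a near miss over the line; a low score with findings goes to a human instead of being flagged automatically, which limits the damage if the LLM imagines an anomaly that isn't there.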

It’s still cat and mouse. But now the cat has two sets of eyes.

ImageWhisperer is free at imagewhisperer.org. If you find something that doesn’t work, tell me. That’s how this gets better. For more, see this manual on AI detection.

The science behind this article

Everything described here is grounded in peer-reviewed research:

Composite Detection

Sensor Noise (PRNU): PRNU-based Image Forensics (PMC, 2025)

Edge Artifacts: Edge-Enhanced Transformer for Splicing Detection (2024)

Lighting Analysis: Detecting Inconsistencies in Lighting — achieves 92% accuracy

Threshold & Ensemble Methods

Multi-Model Fusion: Ensemble Methods for Synthetic Image Detection — 93.4% accuracy

LDM Detection: Detecting Diffusion-Generated Images (2024)

LDM & InVID Research: UvA Digital Methods Data Sprint on Synthetic Images

Face Detection in AI Images

Crowd Face Artifacts: Multi-Face Deepfake Detection (ICCV 2025)

Human vs Machine: Detecting Deepfakes (PNAS)

Tools

InVID-WeVerify Plugin — 155,000+ users

DeepfakeBench — Open benchmark

Henk van Ess teaches AI research workshops worldwide, and writes at Digital Digging.

Thanks to Denis Teyssou and the InVID-WeVerify team for the feedback that made this update possible. And to Ronan Le Nagard for breaking my tool in the most instructive way possible.

